Objectives

The research activities in the lab are focused on fundamental everyday tasks in three parallel thrusts: human, human-robotic hybrid, and humanoid. The activities of the virtual lab aim at:

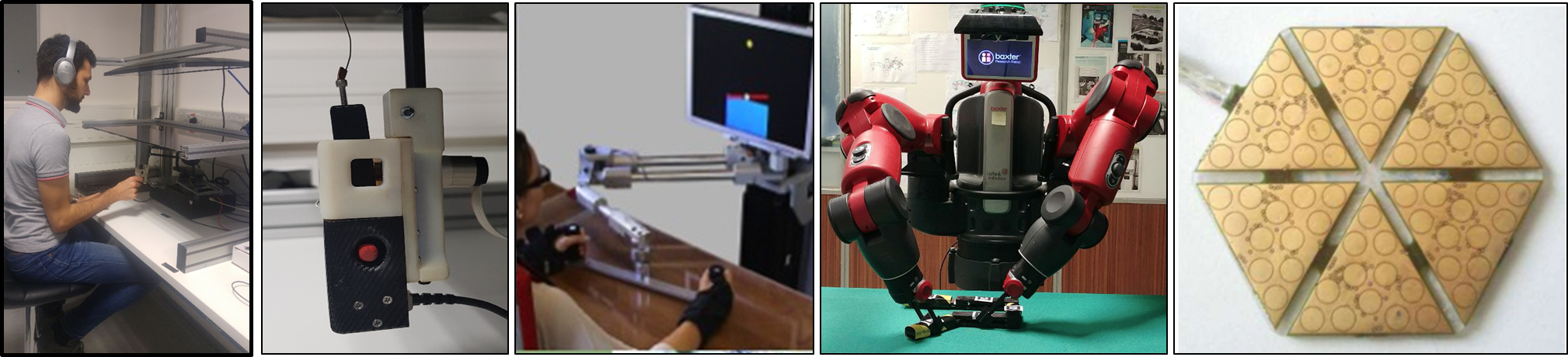

- Measuring and modeling sensory-motor performance during interactions and manipulation tasks for humans and humanoids:

Upper limb somatosensory deficits are common and disabling symptoms after stroke and spinal cord injury and in people with neurological diseases such as Parkinson’s disease and multiple sclerosis, and they have a deleterious impact on several daily-life activities. These sensory abilities are also likely to be affected in the early stage of the disease, since they result from a complex process of integration of sensory inputs from different peripheral receptors. Despite their relevance, sensory abilities are difficult to quantify at neurological examination because of the limited sensitivity and reproducibility of the clinical tests (Casadio et al., 2018). Similarly, for sensorized robots and bionic limbs, it is challenging to quantify the applied sensory stimulations and interpret the information correctly, and benchmarks for successful somatosensation are missing. The outcomes of this aim are stable and reliable methods to quantify and model somatosensation and their use in the control of interactions and manipulation tasks.

- Augmenting and substituting the somatosensory information to enhance control abilities in people with neurological deficits or with bionic limbs:

Commercially available prostheses and rehabilitative interventions for people with neurological disability focus on restoring motion. However, real-time sensory feedback is critical for generating desired movements and performing skillful manipulation. Thus, there is an urgent need to understand and mitigate the effects of somatosensory deficits by developing new, effective technological solutions for sensory substitution and/or enhancement and by integrating this augmented or artificial feedback into the efficient control of movements and forces. Recent years have seen significant advances in the development of bionic devices for advanced peripheral (Cheesborough et al., 2015) and central (Hochberg et al., 2006; McFarland and Wolpaw, 2008) neural prostheses and body-machine interfaces (Mussa-Ivaldi et al., 2011). Several recent studies reported promising results on closing the loop and presenting the users of these bionic devices with touch information (Hebert et al., 2014; Raspopovic et al., 2014; Tan et al., 2014). However, an understanding of which information is important for effective somatosensory interaction with the world, and of how best to convey this information to users, is largely missing. The outcomes of this aim are intelligent technologies that can restore, substitute or create somatosensory abilities by providing information on the state of the human or robotic body and its physical surroundings. We will have reached this goal when we are able to provide optimal real-time feedback that achieves control goals safely and efficiently, with performance equal to or better than that of healthy humans at their best.

- Developing humanoid robots able to use somatosensory information in their control actions:

In the past three decades, many solutions to design, engineer, and manufacture tactile sensors for robots have been discussed in the literature (Dahiya et al., 2010). Thanks to their small size and the availability of low-cost read-out electronics, tactile sensors based on the capacitive principle have been widely adopted for a variety of applications (Billard et al., 2013; Maiolino et al., 2015). This allows designers and engineers to develop ad hoc solutions (e.g., robot fingertips for prosthetic devices) and to obtain sensory arrays with hundreds or thousands of tactile elements that cover wide areas of a robot’s body (Schmitz et al., 2011). Recently, robotics researchers have witnessed a decisive paradigm shift: robotic devices are evolving from tools that are difficult to use and suitable for executing stereotypical and simple behaviors into versatile intelligent machines with a certain degree of autonomy that physically interact with humans and operate in symbiosis with them. For continued progress in this direction, an additional shift in sensing and control is critical: from vision dominance to touch sensing, and from control favoring contact avoidance to physical interaction and co-manipulation. We focus on two specific, yet intertwined, requirements for achieving such a vision: (1) the use of embedded sensory systems, such as large-scale robot skin or distributed inertial information, to enable the development of physical human-robot interaction behaviors (Baglini et al., 2014); (2) the design of algorithms that extract and represent tactile and proprioceptive information originating from both purposive behavior and interaction with the environment, so that the robotic device can operate in highly dynamic scenarios (Cannata et al., 2010).